One of the things that I am really sensitive to is friction. Any time I run into it, I evaluate how much it is going to cost to remove it. There are two sides to this evaluation. There is the pure monetary/time side, and then there is the pure opportunities lost side.

The usual evaluation goes something like “it takes 3 minutes to do manually, and would take a week to automate”. Assuming a standard 40-hour week, we would need to do the task 800 times before it makes sense to automate it.
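To make that arithmetic explicit, here is a minimal sketch of the break-even calculation. The function and parameter names are mine, made up for illustration, and it only covers the time side of the evaluation, not the opportunities lost side.

```python
import math


def break_even_runs(manual_minutes: float, automation_hours: float) -> int:
    """Number of manual runs whose total time equals the cost of automating."""
    # Convert the automation cost to minutes, then divide by the cost of one manual run.
    return math.ceil(automation_hours * 60 / manual_minutes)


# 3 minutes per manual run, one 40-hour week to automate.
print(break_even_runs(manual_minutes=3, automation_hours=40))  # 800
```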

The problem with that calculation is that it ignores the opportunities lost angle. What most people do not usually consider is that we tend to avoid things that are painful.

If adding content to a website requires you to commit changes to SVN and then log in to a server and update the site, that is about 3 additional minutes that you tacked on to something that is probably pretty minor. If you are on a slow connection, those can be a pretty frustrating 3 minutes. That means that edits to the site get batched, so a lot of minor edits are bundled together until the 3 minutes of overhead becomes insignificant. That means that the site gets updated less often.

If maintaining two profiles of the same application requires you to merge between slightly different branches (a pretty painful process), they are not going to be in sync. On the other hand, building a way to develop multiple profiles from a single codebase is a pretty significant investment, and it introduces complexity into the software.

If committing is going to hit a network resource (and thus take a bit longer), you are going to commit less often. If branching… but you get the point, don’t you?

If releasing your software is a manual process (even to the point of just promoting a CI build to release status), you aren’t going to release very often.

Those are just a few recent examples that I have run into where friction was the deciding factor. In all cases, I put in the time to reduce the friction to the lowest possible level. What I usually look for is the opportunities lost cost, because those tend to be pretty significant.

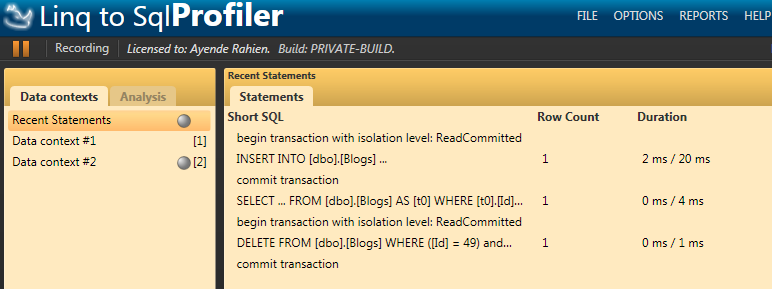

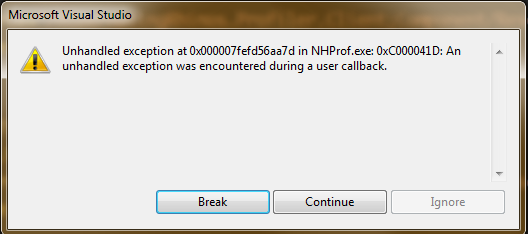

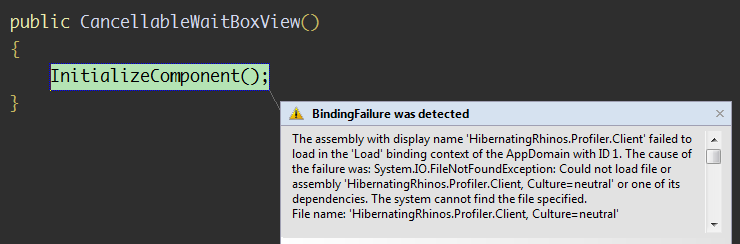

I don’t think about releasing the profiler versions, and I released 20 times in the last three days (5 different builds, times 4 different profiles). The build process for the profiler had me write several custom tools (including my own bloody CI server, for crying out loud, a daily build site, upload and registration tools, etc). That decision has justified itself a hundred times over, by ensuring that my work isn’t being hampered by release management, and it gives us a very rapid turnaround for releases.

That, in turn, means that we get a lot more confidence from customers (we had a problem and it was resolved in 2 hours). There is actually a separate problem here, that we release too often, but I consider that a better alternative than slow response times for customers.

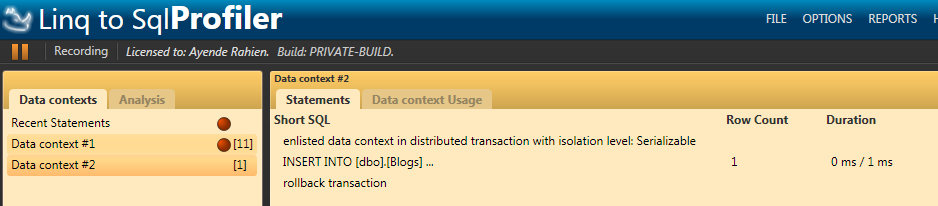

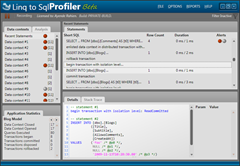

Supporting Linq to SQL as a second target in NH Prof was something that we could have done in three days flat. In practice, it took over a month to get to a position where we could properly release it. The extra time was dedicated to doing it right. And no, I am not talking about architectural beauty, or any such thing.

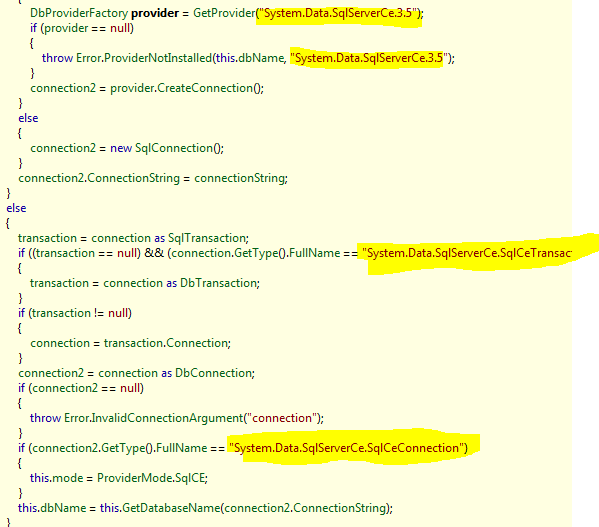

The three-day estimate for Linq to SQL assumes branching the code base and simply making the changes inline. There is relatively little change from the NH Prof version, and we could have made it all work in a short amount of time.

I am talking about doing it in a way that introduces no additional friction to our work. Right now I am supporting 4 different profiles (NHibernate, Hibernate, Linq to SQL, Entity Framework), and in my wildest dreams I am thinking of having 10 or more(!). Frictionless processes mean that the additional cost of supporting each new profile is vastly reduced.

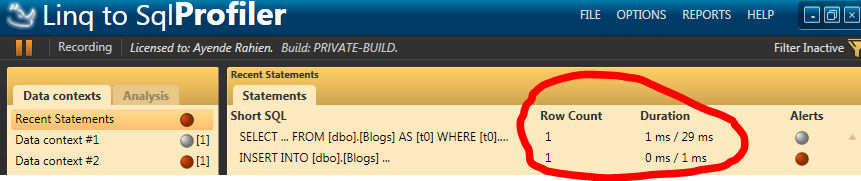

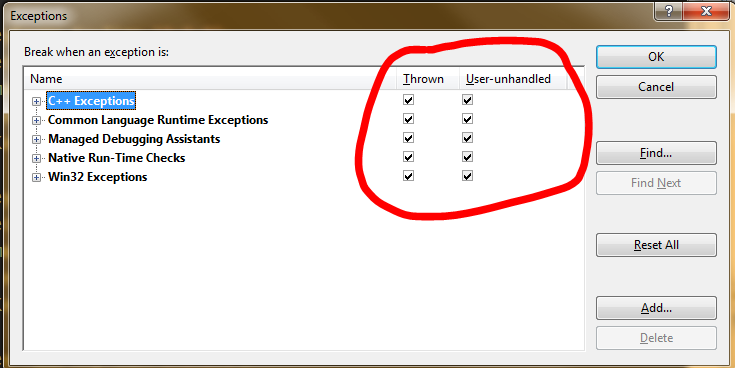

Having different branches for different profiles was considered and almost immediately rejected. It would introduce too much friction, confusion and pain into the development process. Instead, we went the route of having a single code base that is branched automatically by the build process. It means that we have a slightly more complex infrastructure, but it also means that we can do it once and touch it only very rarely.
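To give a feel for the idea, here is a rough sketch of a build step that fans a single code base out into one artifact per profile. This is not the actual profiler build. The profile names come from the list above, but the `BuildPlan` type, the `PROFILE_*` symbols and the artifact naming are all hypothetical, invented just to illustrate the shape of it.

```python
from dataclasses import dataclass

# Profile names come from the post; everything else here is hypothetical.
PROFILES = ("NHibernate", "Hibernate", "LinqToSql", "EntityFramework")


@dataclass(frozen=True)
class BuildPlan:
    profile: str   # which profile this artifact targets
    define: str    # hypothetical compile-time symbol that selects the profile
    artifact: str  # hypothetical output file name


def plan_builds(build_number: int, profiles=PROFILES) -> list[BuildPlan]:
    """Fan one build of the single code base out into one artifact per profile."""
    return [
        BuildPlan(
            profile=name,
            define=f"PROFILE_{name.upper()}",
            artifact=f"{name}.Profiler-build-{build_number}.zip",
        )
        for name in profiles
    ]


# One build number in, one planned artifact per profile out.
for plan in plan_builds(build_number=1):
    print(plan.define, "->", plan.artifact)
```

The point of the design is that adding a profile means adding one entry to the list, not creating another branch that has to be merged on every bug fix. Five builds across four profiles comes out to the 20 releases mentioned earlier.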

Does it pay off? I would say it does just in terms of not having to consider merging between the different branches whenever I make a change or a bug fix. But it is more than that.

A week ago I didn’t have the Entity Framework Profiler, today it is in a private beta, and in a week or two it is going to go to public beta. All that time spent on integrating Linq to SQL paid for itself when we could just plug in a new profile without really thinking about it.