Kelly Sommers has been commenting about transactional models on Twitter.

"Fully ACID writes but BASE reads" - Go back to the books. You can't selectively opt out of guarantees as you wish. What can client observe?

And some other things in the same vein. I am going to assume that this is relevant for RavenDB, based on other tweets from her. And I think that this requires more space than Twitter allows. The gist of Kelly's argument, as I understand it, is that RavenDB isn't ACID because it does BASE reads.

I am not sure that I am doing the argument justice, and it is mostly pieced together from a whole bunch of tweets, so I would love to see a complete blog post about it. However, I think that at least with regards to RavenDB, there is a major misconception going on here.

RavenDB is an ACID database, period. If you put data in, you can be sure that the data will be there and you can get it out again. Indeed, the data you put in is immediately consistent. There is no scenario in which you can do any sequence of read/write/read/write and not immediately get the last committed state for that server. What I think is confusing people is the fact that we have implemented BASE queries. So let us go back a bit and discuss that.

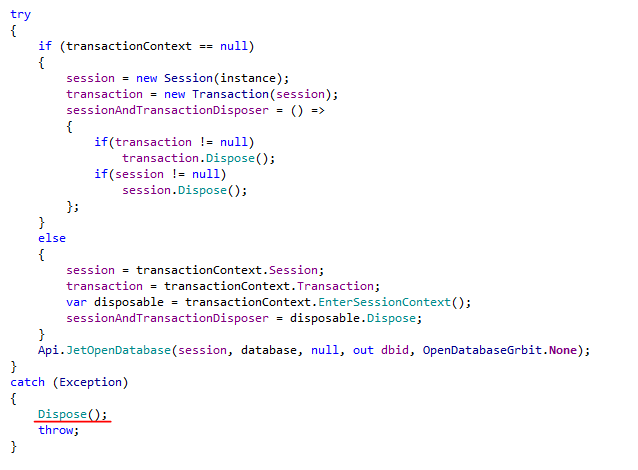

Internally, RavenDB is structured as a set of components. One of those components is the document store, which is responsible for... storing documents (and a lot more besides, but that isn't very important right now). The document store is ACID. It ensures consistency, MVCC, durability, and all that other good stuff.

The document store is also limited in the kind of queries that it supports. In effect, it supports only the following:

- Document by key

- Document by key prefix

- Documents by update order

When talking about ACID, I am talking about this part of the system, in which you are writing documents and loading them by their id or by update order.

Note that in RavenDB, we use the term "load" to refer to accessing the document store. That is probably the cause for confusion. Loading is never inconsistent and obeys a strict snapshot isolation interpretation of the data. That means that asking something like: "give me users/123" is always going to give you the immediately consistent result.
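To make the three access paths concrete, here is a minimal in-memory sketch. It is a toy model, not RavenDB's API: the `DocumentStore` class, its method names, and the integer `etag` counter are all assumptions made for illustration. The point is only that every load goes straight to the last committed version of a document.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Document:
    key: str
    data: dict
    etag: int  # increases with every write; this gives us "update order"


class DocumentStore:
    """Toy in-memory stand-in for the document store (hypothetical API)."""

    def __init__(self) -> None:
        self._docs: Dict[str, Document] = {}
        self._last_etag = 0

    def put(self, key: str, data: dict) -> int:
        self._last_etag += 1
        self._docs[key] = Document(key, dict(data), self._last_etag)
        return self._last_etag

    def load(self, key: str) -> Optional[Document]:
        # Document by key: always the last committed version.
        return self._docs.get(key)

    def load_starting_with(self, prefix: str) -> List[Document]:
        # Documents by key prefix, returned in key order.
        return [self._docs[k] for k in sorted(self._docs) if k.startswith(prefix)]

    def load_after(self, etag: int) -> List[Document]:
        # Documents by update order: everything written after `etag`.
        newer = [d for d in self._docs.values() if d.etag > etag]
        return sorted(newer, key=lambda d: d.etag)


store = DocumentStore()
store.put("users/123", {"name": "Alice"})
store.put("orders/1", {"total": 10})
store.put("users/123", {"name": "Alice B."})  # overwrite the first version

by_key = store.load("users/123").data["name"]
by_prefix = [d.key for d in store.load_starting_with("users/")]
by_update_order = [d.key for d in store.load_after(0)]
```

Note that none of these three operations needs a secondary index, which is why they can stay on the immediately consistent side of the system.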

In contrast to that, RavenDB also has the index store. The index store allows us to create complex queries, and that is subject to eventual consistency. That is by design, and a large part of what makes RavenDB able to dynamically adjust its own behavior at runtime based on production usage.

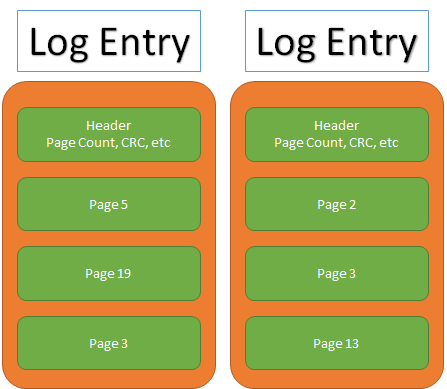

We have separate background processes that apply the indexes to the incoming data and write the results to the actual index store. This is done in direct contrast to other indexing systems (for example, RDBMS) in which indexes are updated as part of the same transaction. That leads to several interesting results.

Indexes aren't as expensive - In an RDBMS, the more indexes you have, the slower your writes become. In RavenDB, indexes have no impact on the write code path. That means that you can have a lot more indexes without worrying about it too much. Indexes still have a cost, of course, but in most systems, you just don't feel it.

Lock free reads & writes - Unlike other databases, where you have to pay the indexing cost either on write (common) or read (rare), with RavenDB both reads & writes operate without having to deal with the indexing cost. When you make a query, we will immediately give you an answer from the results that we have right now. When you perform a write, we will process that without waiting for indexing.
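A rough sketch of that separation, under heavy simplification: the `Database` class below is hypothetical, the index is a plain dictionary rather than Lucene, and the background process is replaced by an explicit `run_indexing_batch()` call so that the example stays deterministic. What it shows is that `put` does no index work at all, and that a query answers from whatever the index holds at that moment.

```python
from typing import Dict, List, Set, Tuple


class Database:
    """Toy model: writes touch only the documents; indexing runs separately."""

    def __init__(self) -> None:
        self.docs: Dict[str, Tuple[int, dict]] = {}  # key -> (etag, data)
        self.last_etag = 0
        self.index: Dict[str, Set[str]] = {}         # city -> document keys
        self.indexed_city: Dict[str, str] = {}       # key -> city currently indexed
        self.indexed_etag = 0                        # how far indexing has got

    def put(self, key: str, data: dict) -> None:
        # Write path: no index work happens here.
        self.last_etag += 1
        self.docs[key] = (self.last_etag, data)

    def run_indexing_batch(self) -> None:
        # Stand-in for the background process: catch up on every document
        # written since the previous batch, in update order.
        pending = sorted(
            ((etag, key, data) for key, (etag, data) in self.docs.items()
             if etag > self.indexed_etag),
            key=lambda item: item[0],
        )
        for etag, key, data in pending:
            old_city = self.indexed_city.pop(key, None)
            if old_city is not None:
                self.index[old_city].discard(key)
            self.index.setdefault(data["city"], set()).add(key)
            self.indexed_city[key] = data["city"]
            self.indexed_etag = etag

    def query_by_city(self, city: str) -> List[str]:
        # Read path: answer from whatever the index holds right now.
        return sorted(self.index.get(city, set()))


db = Database()
db.put("users/1", {"city": "Haifa"})
db.run_indexing_batch()
db.put("users/2", {"city": "Haifa"})

before = db.query_by_city("Haifa")  # the index has not seen users/2 yet
loaded = db.docs["users/2"][1]      # the document itself is already there
db.run_indexing_batch()
after = db.query_by_city("Haifa")
```

The write of `users/2` is visible to a load immediately, while the query only sees it once the indexing batch has run.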

Dynamic load balancing - because we don't promise immediate consistency, we can detect and scale back our indexing costs in times of high usage. That means that we can shift resources from indexing to answering queries or accepting writes. And we will be able to pick up the slack when the peak relaxes.

Choice - one of the things that we absolutely ensure is that we will tell you when you make a query and we give you results that might not be up to date. That means that when you get the reply, you can make an informed decision. "This query is the result of all the data in the index as of 5 seconds ago" - decide if this is good enough for you or if you want to wait. Unlike other systems, we don't force you to accept whatever consistency model we happen to have. You get to choose whether you want the answers we can give you right now, or whether you want to wait until we can give you the consistent version. That is up to you.
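Here is a small sketch of what that choice could look like from the caller's side. Everything in it is an assumption for illustration: the `IndexedStore` class, the `is_stale` flag on `QueryResult`, and the `wait_for_non_stale` timeout parameter are made-up names, not RavenDB's client API. The indexer is simulated by a timer thread that runs one indexing pass a little later.

```python
import threading
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class QueryResult:
    keys: List[str]
    is_stale: bool  # True if some writes are not reflected in the index yet


class IndexedStore:
    """Toy store that reports staleness and lets the caller opt in to waiting."""

    def __init__(self) -> None:
        self._cond = threading.Condition()
        self._docs: Dict[str, Tuple[int, str]] = {}  # key -> (etag, role)
        self._last_etag = 0
        self._indexed: Dict[str, str] = {}           # key -> role, as indexed
        self._indexed_etag = 0

    def put(self, key: str, role: str) -> None:
        with self._cond:
            self._last_etag += 1
            self._docs[key] = (self._last_etag, role)

    def index_once(self) -> None:
        # One pass of the (hypothetical) background indexer.
        with self._cond:
            for key, (etag, role) in self._docs.items():
                if etag > self._indexed_etag:
                    self._indexed[key] = role
            self._indexed_etag = self._last_etag
            self._cond.notify_all()

    def query(self, role: str, wait_for_non_stale: Optional[float] = None) -> QueryResult:
        with self._cond:
            if wait_for_non_stale is not None:
                # Wait until the index has caught up with everything written
                # before this query started, or until the timeout expires.
                cutoff = self._last_etag
                self._cond.wait_for(
                    lambda: self._indexed_etag >= cutoff,
                    timeout=wait_for_non_stale,
                )
            keys = sorted(k for k, r in self._indexed.items() if r == role)
            return QueryResult(keys, is_stale=self._indexed_etag < self._last_etag)


store = IndexedStore()
store.put("users/1", "admin")
store.index_once()
store.put("users/2", "admin")

fast = store.query("admin")  # answer right now, flagged as possibly out of date

timer = threading.Timer(0.05, store.index_once)  # indexing catches up shortly
timer.start()
fresh = store.query("admin", wait_for_non_stale=5.0)
timer.join()
```

The default is the fast answer plus an honest flag. Waiting is something the caller asks for explicitly, and even then it is bounded by a timeout.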

Smarter indexes - under the hood, RavenDB uses Lucene for indexes. Even without counting things like full text or spatial searches, we get a lot more than what you get from the type of indexes you are used to in other systems.

For example, from the MongoDB docs (http://docs.mongodb.org/manual/core/index-compound/):

The following index can support both these sort operations:

However, the above index cannot support sorting by ascending username values and then by ascending date values, such as the following:

In contrast, in RavenDB, it is sufficient that you just tell us what you want to index, and you don't need to worry about creating two indexes (and paying twice the price) if you want to allow users to sort the grid in multiple directions.
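The limitation quoted from the MongoDB docs above comes from how a single ordered compound index works, and it can be modelled with a sorted list. This is only a model of an index with one fixed ordering, not MongoDB's or RavenDB's implementation, and the `events` data is made up. Walking the list forward or backward gives two sort orders for free; any other combination needs a separate sort or a second index.

```python
from typing import List, Tuple

Event = Tuple[str, int]  # (username, date as a plain integer for simplicity)

events: List[Event] = [("bob", 3), ("alice", 1), ("bob", 1), ("alice", 2)]

# One compound index ordered by (username ascending, date descending).
index = sorted(events, key=lambda e: (e[0], -e[1]))

forward = list(index)             # username asc, date desc: walk the index
backward = list(reversed(index))  # username desc, date asc: walk it backwards

# username asc, date asc matches neither traversal, so it needs its own
# sort (or a second index built with that ordering).
asc_asc = sorted(events)
```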

On the fly optimizations - the decision to split the document store and the index store into separate components has paid off in ways that we didn't really predict. Because we have the separation, and because indexes are background processes that don't really impact the behavior of the rest of the system (outside of resources consumed), we have the freedom to do some interesting things. One of them is live index rebuild.

That means that you can create an index in RavenDB, and the system would go ahead and create it. At the same time, all other operations will go on normally. It can be writes to the document store, it can be updates to the other indexes. Anything at all.

Just to give you some idea about this feature, you'll note that this feature exists only in the Enterprise edition for SQL Server, for example.

And the implication of that is that we can actually create an index in production. In fact, we do so routinely; that is the basis of our ad hoc querying ability. We analyze the query, the query optimizer decides where to dispatch it, and it will create a new index if that is needed. There is a whole host of behavior just in this one feature (optimizations, heuristics, scale back, etc.) that we can do, but for the most part, you don't care. You make queries to the server. RavenDB analyzes them and produces the most efficient indexes to match your actual production load.

Anyway, I am starting to go on about RavenDB, and that is rarely short. So I'll summarize. RavenDB is composed of two distinct parts: the ACID document store, which allows absolute immediate consistency for both reads & writes, and the index store, which is eventually consistent, updated in the background, and always available for queries. Queries also do zero waits (unless you explicitly ask for that) and give you much better and more consistent performance.

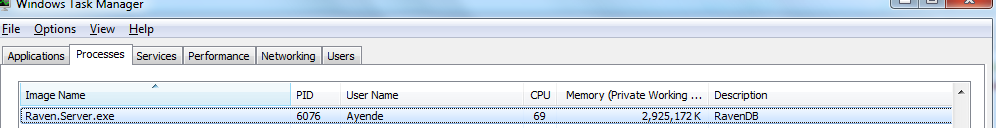

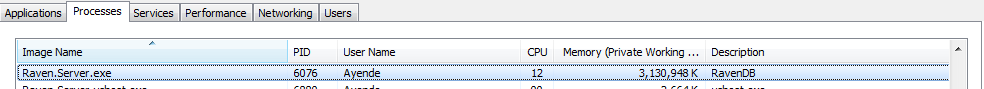

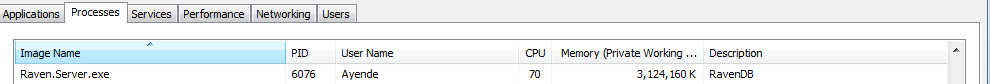

Oh, and a final number to conclude. The average time between a document update in the document store and the results showing up in the index store? That is 23ms*.

(And I blame 15.6ms of that on the clock resolution).