Most developers have been weaned on relational modeling and have to make a non-trivial mental leap when the time comes to model data in a non-relational manner. That is hard enough on its own, but what happens when the data store that you use actually has multi-model capabilities? As an industry, we are seeing more and more databases that take this path and offer multiple models to store and query the data as part of their core nature. For example, ArangoDB, CosmosDB, Couchbase, and of course, RavenDB.

RavenDB, for example, gives you the following models to work with:

- Documents (JSON) – Multi master with any node accepting reads and writes.

- ACID transactions over multiple documents.

- Simple / full text queries.

- Map/Reduce and aggregation queries.

- Binary data – Attachments to documents.

- Counters (Map<string, int64>) – CRDT multi master distributed counters.

- Key/Value – strong distributed consistency via Raft protocol.

- Graph queries – on top of the document model.

- Revisions – built in audit trail for documents

With such a wealth of options, it can be confusing to select the appropriate tool for the job when you need to model your data. In this post, I aim to make sense of the options RavenDB offers and guide you toward making the optimal choices.

The default and most common model you’ll use is going to be the document model. It is the one most appropriate for business data and you’ll typically follow the Domain Driven Design approach for modeling your data and entities. In other words, we are talking about Aggregates, where each document is a whole aggregate. References between entities are either purely local to an aggregate (and document) or only between aggregates. In other words, a value in one document cannot point to a value in another document. It can only point to another document as a whole.
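
As a rough illustration of the aggregate idea, here is a minimal sketch in plain Python (no RavenDB client involved). The order document, its field names and the id format are all hypothetical; the point is only that nested values belong to the aggregate, while references to anything outside it are ids of whole documents.

```python
# Hypothetical order aggregate: everything the order owns lives inside the document.
order = {
    "id": "orders/1-A",
    "company": "companies/42-A",  # reference to another document as a whole
    "lines": [                    # values local to this aggregate
        {"product": "products/7-A", "quantity": 2, "price_each": 19.5},
        {"product": "products/9-A", "quantity": 1, "price_each": 5.0},
    ],
}


def referenced_documents(doc):
    """Collect the ids of the other documents this aggregate points at."""
    refs = {doc["company"]}
    refs.update(line["product"] for line in doc["lines"])
    return sorted(refs)


def order_total(doc):
    """Business logic that needs nothing beyond the aggregate itself."""
    return sum(line["quantity"] * line["price_each"] for line in doc["lines"])
```

Note that `order_total` never has to load another document, which is what makes the aggregate a convenient unit for business logic.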

Most of your business logic will be focused on the aggregate level. Even when a single transaction modifies multiple documents, most of the business logic is done at each aggregate independently. A good way to handle that is using Domain Events. This allows you to compose independent portions of your domain logic without tying it all in one big knot.

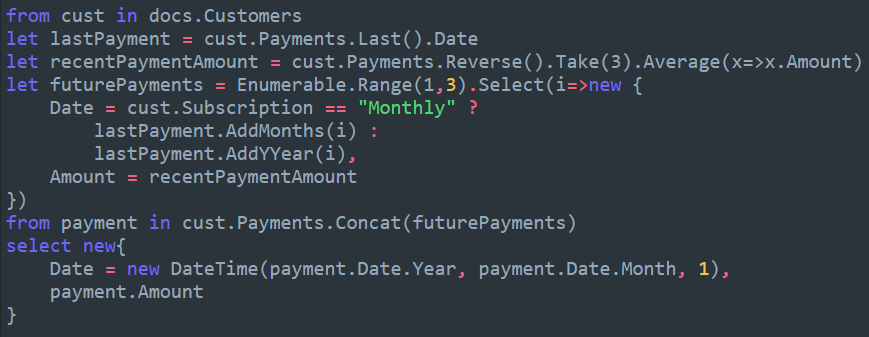

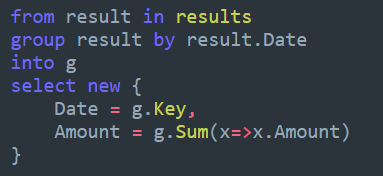

We talked about modifying documents so far, but a large part of what you’ll do with your data is query and present it to users. In these cases, you need to make a conscious and explicit decision: whether your display model is going to be based on your documents or on a different source. You can use RavenDB ETL to project the data out to a different database, changing its shape to the appropriate view model along the way. RavenDB ETL allows you to replicate a portion of the data in your database to another location, with the ability to modify the results as they are being sent. This can be a great tool to aid you in bridging the domain model and the view model without writing a lot of code.

This is useful for applications that have a high degree of complexity in their domain and business rules. For most applications, you can simply project the relevant data out at query time, but for more complex systems, you may want to have strict physical separation between your read model and the domain model. In such a scenario, RavenDB ETL can greatly simplify the task of moving (and transforming) the data from the write side to the read side.

When it comes to modeling, we also need to take into account RavenDB’s map/reduce indexes. These allow you to define aggregations that will run in the background. In other words, at query time, the work has already been done. This in turn leads to blazing fast aggregation queries and can be another factor in the design of the system. It is common to use map/reduce indexes to aggregate the raw data into a more usable form and work with the results, either directly from the index or by using the output collection feature to turn the results of the map/reduce index into real documents (which can be further indexed, aggregated, etc).
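
To make the map/reduce idea concrete, here is a small sketch in plain Python. It is not RavenDB index syntax; the order shape and the function names are assumptions made for illustration. The detail worth noticing is that the reduce output has the same shape as its input, so previously computed results can be reduced again together with new entries instead of starting from scratch.

```python
from collections import defaultdict

# Hypothetical raw documents.
orders = [
    {"company": "companies/1-A", "total": 100.0},
    {"company": "companies/2-A", "total": 40.0},
    {"company": "companies/1-A", "total": 60.0},
]


def map_order(order):
    # Map: emit one entry per order, keyed by company.
    return {"company": order["company"], "count": 1, "total": order["total"]}


def reduce_entries(entries):
    # Reduce: group by company and sum. The output has the same shape as
    # the input, so it can be fed back in when new entries arrive.
    grouped = defaultdict(lambda: {"count": 0, "total": 0.0})
    for entry in entries:
        group = grouped[entry["company"]]
        group["count"] += entry["count"]
        group["total"] += entry["total"]
    return [{"company": key, **value} for key, value in sorted(grouped.items())]


results = reduce_entries(map_order(o) for o in orders)

# A new order arrives: re-reduce the stored results plus the one new entry.
new_entry = map_order({"company": "companies/2-A", "total": 10.0})
updated = reduce_entries(results + [new_entry])
```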

Thus far, we have only touched on document modeling, mind. There are a bunch of other options as well. We’ll start with the simplest option, attachments. At its core, an attachment is just that: a way to attach some binary data to the document. As simple as it sounds, it has some profound implications from a modeling point of view. The need to store binary data somewhere isn’t new, obviously, and there have been numerous ways to resolve it. In a relational database, a varbinary(max) column is used. In a document database, I’ve seen the binary data stored directly in the document (either as raw binary data or as a BASE64 encoded value). In most cases, this isn’t a really good idea. It blows up the size of the document (and the table) and complicates the management of the data. Storing the data on the file system leads to a different set of problems: coordinating transactions between the database and the file system, organizing the data, guarding against paths such as “../../etc/passwd”, backup and restore, and many more.

Attachments

These are all things that you want your database to handle for you. At the same time, binary data is related to but not part of the document. For those reasons, we use the attachment model in RavenDB. This is meant to be viewed just like attachments in email. The binary data is not stored inside the document, but it is strongly related to it. Common use cases for attachments include the profile picture for a user’s document, the signatures on a lease document, the Excel spreadsheet with details about a loan for a payment plan document or the associated pictures from a home inspection report. A document can have any number of attachments, and an attachment can be of any size. This gives you a lot of freedom to attach (pun intended) additional data to your documents without any hassle. Like documents, attachments also work in multi master mode and will be replicated across the cluster with the document.

Counters

While attachments can be any raw binary data and have only a name (and optional mime type) for structure, counters are far more strictly defined. A counter is… well, a counter. It counts things. On the most basic level, it is just a named 64-bit integer that is associated with a document. And like attachments, a document may have any number of such counters. But why is it important to have a 64-bit integer attached to the document? How could something so small be important enough that we would need a whole new concept for it? After all, couldn’t we just store the same counter more simply as a property inside the document?

To understand why RavenDB has counters, we need to understand what they aren’t. They are related to the document, but not part of the document. That means that an update to the counter is not going to modify the document as a whole. This, in turn, means that operations on the counters can be very cheap, regardless of how many counters you have in a document or how often you modify the counter. Having the counter separate from the document allows us to do several important things:

- Cheap updates

- Distributed modifications

In a multi master cluster, if any node can accept any write, you need to be aware of conflicts, which happen when two updates to the same value are made on two disjoint servers. In the case of documents, RavenDB detects and resolves the conflict according to the pre-defined policy. In the case of counters, there is no such need. A counter in RavenDB is stored using a CRDT. This is a format that allows us to handle concurrent modifications to the same value without losing data or taking expensive locks. This makes counters suitable for values that change often. A good example of a counter is tracking views on a page or an ad: you can distribute the operations across a number of servers and still reach the correct final tally. This works both for increment and decrement, obviously.
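
To show why a CRDT can merge concurrent updates without conflicts, here is a minimal sketch of a textbook PN-counter in Python. This is a well-known CRDT design used here purely as an illustration; it is an assumption of this sketch and not a description of how RavenDB stores counters internally. Each node only ever changes its own slots, so merging is just a per-node maximum, and the order or number of merges does not matter.

```python
class PNCounter:
    """Textbook PN-counter: per-node increment and decrement tallies."""

    def __init__(self, node_id):
        self.node_id = node_id
        self.increments = {}  # node id -> total incremented on that node
        self.decrements = {}  # node id -> total decremented on that node

    def add(self, delta):
        # A node only ever touches its own slot, so slots never conflict.
        side = self.increments if delta >= 0 else self.decrements
        side[self.node_id] = side.get(self.node_id, 0) + abs(delta)

    def merge(self, other):
        # Per-node tallies only grow, so the larger value is the newer one.
        pairs = (
            (self.increments, other.increments),
            (self.decrements, other.decrements),
        )
        for mine, theirs in pairs:
            for node, value in theirs.items():
                mine[node] = max(mine.get(node, 0), value)

    @property
    def value(self):
        return sum(self.increments.values()) - sum(self.decrements.values())


# Two nodes accept writes independently, then exchange state.
a, b = PNCounter("A"), PNCounter("B")
a.add(3)
b.add(5)
b.add(-1)
a.merge(b)
b.merge(a)
a.merge(b)  # merging again changes nothing
```

After the exchange both replicas report the same value, even though neither node coordinated with the other while accepting writes.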

Given that counters are basically just Map<string, int64>, you might expect that there isn’t any modeling work to be done here, right? But it turns out that there is actually quite a bit that can be done even with that simple an interface. For example, when tracking views on a page or downloads for a particular package, I’m interested not only in the total number of downloads, but also in the downloads per day. In such a case, whenever we want to note another download, we’ll increment both the counter for overall downloads and another counter for downloads on that particular day. In other words, the name of the counter can hold meaningful information.
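
The naming scheme described above can be sketched with an ordinary Python `Counter` standing in for the counters attached to a single document. The counter names (`downloads` and `downloads/<date>`) are hypothetical conventions chosen for this example.

```python
from collections import Counter
from datetime import date


def record_download(counters, day):
    # One increment for the running total, one for the specific day.
    counters["downloads"] += 1
    counters[f"downloads/{day.isoformat()}"] += 1


counters = Counter()  # stands in for the counters of one package document
record_download(counters, date(2019, 3, 1))
record_download(counters, date(2019, 3, 1))
record_download(counters, date(2019, 3, 2))

# Because the date is part of the name, per-day figures are a prefix scan away.
per_day = {
    name.split("/", 1)[1]: count
    for name, count in counters.items()
    if name.startswith("downloads/")
}
```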

Key/Value

So far, all the data we have talked about was stored and accessed in a multi-master manner. In other words, we could choose any node in the cluster and make a write to it and it would be accepted. Data that is modified on multiple nodes at the same time would either be merged (counters), stored (attachments) or resolved (documents). This is great when you care about the overall availability of your system: we are always accepting writes and always proceeding forward. But that isn’t always what you need. There are situations where you might need to have a higher degree of consistency in your operations. For example, if you are selling a fixed number of items, you want to be sure that two buyers hitting “Purchase” at the same time don’t cause you problems just because their requests used different database servers.

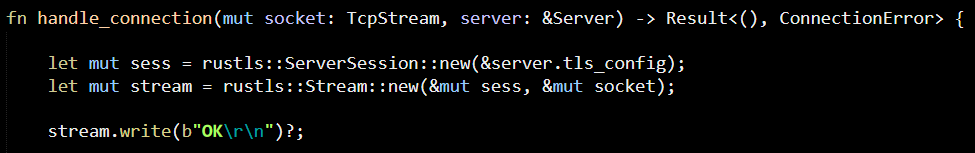

In order to handle this situation, RavenDB offers the Compare Exchange (Cmp Xcng) model. This is a cluster wide key/value store for your database that allows you to store named values (integers, strings or JSON objects) in a consistent manner. This feature allows you to ensure consistent behavior for high value data. In fact, you can combine this feature with cluster wide transactions.

Cluster wide transactions allow you to combine operations on documents with Cmp Xcng operations to create a single consistent transaction. This mode enables you to perform conditional operations to modify your documents based on the globally consistent Cmp Xcng values.

Good examples of Cmp Xcng values include pessimistic locks and their owners, which let you create a cluster wide lock that is guaranteed to be consistent and safe regardless of what is going on with the cluster. Another example is storing global configuration for your system so that it is the same on all the nodes in the cluster.
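
The pessimistic lock use case can be sketched as follows. This is a single-process, in-memory stand-in written for illustration only: the class, its method names and the "index 0 means the key does not exist yet" convention are assumptions of this sketch, not the RavenDB client API. In a real cluster, each `put` would have to go through the consensus protocol so that every node agrees on the outcome.

```python
class CompareExchangeStore:
    """In-memory stand-in for a cluster wide compare-and-swap key/value store."""

    def __init__(self):
        self._items = {}      # key -> (index, value)
        self._next_index = 1

    def get(self, key):
        # Missing keys report index 0.
        return self._items.get(key, (0, None))

    def put(self, key, value, expected_index):
        # The write succeeds only if the caller saw the latest version.
        current_index, _ = self.get(key)
        if current_index != expected_index:
            return False, current_index
        index = self._next_index
        self._next_index += 1
        self._items[key] = (index, value)
        return True, index

    def delete(self, key, expected_index):
        current_index, _ = self.get(key)
        if current_index == 0 or current_index != expected_index:
            return False
        del self._items[key]
        return True


def try_acquire_lock(store, name, owner):
    # expected_index=0 means "succeed only if nobody holds this key yet".
    return store.put(f"locks/{name}", {"owner": owner}, expected_index=0)


store = CompareExchangeStore()
first_ok, first_index = try_acquire_lock(store, "items/1", "buyer-a")
second_ok, _ = try_acquire_lock(store, "items/1", "buyer-b")  # loses the race
released = store.delete("locks/items/1", first_index)
third_ok, _ = try_acquire_lock(store, "items/1", "buyer-b")   # now succeeds
```

The value stored under the lock key records the owner, so anyone who fails to acquire the lock can at least find out who is holding it.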

Graph Queries

Graph data stores are used to hold data about nodes and the edges between them. They are a great tool to handle tasks such as social network, data mining and finding patterns in large datasets. RavenDB, as of release 4.2, has support for graph queries, but it does so in a novel manner. A node in RavenDB is a document, quite naturally, but unlike other features, such as attachments and counters, edges don’t have separate physical existence. Instead, RavenDB is able to use the document structure itself to infer the edges between documents. This dynamic nature means that when the time comes to apply graph queries on top of your existing database, you don’t have to do a lot of prep work. You can start issuing graph queries directly, and RavenDB will work behind the scenes to make sure that all the data is found, and quickly, too.

The ability to perform graph queries on your existing document structure is a powerful one, but it doesn’t alleviate the need to model your data properly to best take advantage of this. So what does this mean, modeling your data to be usable both in a document form and for graph operations? Usually, when you need to model your data in a graph manner, you think mostly in terms of the connection between the nodes.

One way of looking at graph modeling in RavenDB is to be explicit about the edges, but I find this awkward and limiting. It is usually better to express the domain model naturally and allow the edges to pop up from the underlying data as you work with it. Edges in RavenDB are properties (or nested objects) that contain a reference to another document. If the edge is in a nested object, then all the properties of the object are also properties of the edge and can be filtered upon.

For best results, you want to model your edge properties as a single nested object that can be referred to explicitly. This is already a best practice when modeling your data, for better cohesiveness, but graph queries make this a requirement.
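
Here is a small sketch, in plain Python, of what "edges inferred from the document structure" can look like. The employee document and the `edges` helper are hypothetical and this is not RavenDB's graph query syntax; it only shows how a nested object carries both the reference and the properties that describe the edge.

```python
# Hypothetical document: one plain-property edge and two nested-object edges.
employee = {
    "id": "employees/7-A",
    "name": "Nancy",
    "reports_to": "employees/2-A",
    "assignments": [
        {"project": "projects/1-A", "role": "lead", "since": 2018},
        {"project": "projects/3-A", "role": "reviewer", "since": 2019},
    ],
}


def edges(doc, path, target_field=None):
    """Yield (source id, target id, edge properties) for one property of doc."""
    value = doc[path]
    items = value if isinstance(value, list) else [value]
    for item in items:
        if isinstance(item, dict):
            # Nested object: every field except the reference describes the edge.
            props = {k: v for k, v in item.items() if k != target_field}
            yield doc["id"], item[target_field], props
        else:
            # Plain property: the value is the reference, with no edge properties.
            yield doc["id"], item, {}


manager_edges = list(edges(employee, "reports_to"))
led_projects = [
    target
    for _, target, props in edges(employee, "assignments", "project")
    if props["role"] == "lead"
]
```

Keeping `role` and `since` together in one nested object is what makes it possible to filter on them as properties of the edge.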

Unlike other graph databases, RavenDB isn’t limited to just graph representation. A graph query in RavenDB is able to utilize the full power of RavenDB queries, which means that you can start your graph operation with a spatial query and then proceed to the rest of the graph pattern match. You should aim to do most of the work in the preparatory queries and not spend most of the time in graph operations.

A common example of a graph operation is fraud detection, with graph queries used to detect multiple orders made using many different credit cards for the same address. Instead of trying to do the matches using just graph operations, we can define a source query on a map/reduce index that would aggregate all the results for orders on the same address. This would dramatically cut down on the amount of work that the database is required to do to answer your queries.

Revisions

The final topic that I want to discuss in this (already very long) post is the notion of Revisions. RavenDB allows the database administrator to define a revisions policy, in which case RavenDB will maintain, automatically and transparently, an immutable log of all changes on documents. This means that you have a built-in audit trail ready for use if you need it. Beyond just providing an audit trail, revisions are also a very important part of several key capabilities in RavenDB.

For example, using revisions, you can get the tuple of (previous, current) versions of any change made in the database using subscriptions. This allows you to define some pretty interesting backend processes, which have full visibility into all the changes that happen to the document over time. This can be very interesting for regression analysis, applying business rules and seeing how the data changes over time.
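
As a sketch of the kind of backend process this enables, the following plain Python walks a list of revisions as (previous, current) pairs and reports which fields changed in each step. The revision history and helper names are made up for the example, and the code works over an in-memory list rather than a real subscription.

```python
def revision_pairs(revisions):
    """Yield (previous, current) for each revision, oldest first."""
    previous = None  # the first revision has no predecessor
    for current in revisions:
        yield previous, current
        previous = current


def changed_fields(previous, current):
    """Names of the fields whose value differs between two revisions."""
    previous = previous or {}
    keys = set(previous) | set(current)
    return sorted(k for k in keys if previous.get(k) != current.get(k))


# Hypothetical revision history of a single document.
history = [
    {"status": "draft", "total": 100},
    {"status": "submitted", "total": 100},
    {"status": "submitted", "total": 120},
]

changes = [changed_fields(p, c) for p, c in revision_pairs(history)]
```

A business rule such as "the total must not change after submission" then becomes a simple check over each pair.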

Summary

I tried to keep this post at a high level and not get bogged down in the details. I’m probably going to have a few more posts about modeling in general and I would appreciate any feedback you may have or any questions you can raise.
