This was an interesting support call, about six months ago, if I recall correctly. It started with a customer calling because his database would sometimes stop responding for minutes (up to 30 minutes in some cases), and only when there was a high load on the system.

We started looking at this, and it was really strange. They could reliably reproduce this on their production system, but only there, and only under pretty high load. Simulating the same load on a non production machine would have no impact whatsoever. Tripling the load on a non production machine had no impact either.

We asked the customer to send us the debug logs, and we found an interesting tidbit of information in there. The issue always happened when the server was loading a database. The sequence of events went something like this:

- Lots of users on the site, using the (multiple) databases on the server.

- For some reason (lunch, meeting, whatever) there is a period of an hour or so when there is no activity on one part of the system, which meant that there are no requests for a particular database.

- RavenDB would unload the database and release all resources associated with it.

- After that period of time, and while the server is still in high load, requests would resume for that database. Usually in a big burst of requests for that database.

- RavenDB would start loading the database.

- All requests would start hanging.

- About 15 – 25 minutes later, RavenDB would start the database.

- All timing information in the logs indicated that there was no long operation.

- Everything return to normal.

To be honest, this looked a look like someone suspended the entire process somehow. CPU during this time was effectively zero, memory usage was fine and there was no I/O.

The quick workaround was to increase the database idle time to account for the breaks in activity. With the database no longer loading and unloading under load, there was no issue.

Now it was the time to try to figure out what the hell was going on. Going over the logs, we could see that there was a pretty big gaps in the initialization order of the database. To be exact, the initialization of the indexes took almost all the time. But why? Looking at each individual index, it took very little time to initialize, and even all of them together didn’t take a long time. But the time from starting the index initialization until the first index started to initialize was very high.

A few years ago we sped up the initialization times for large RavenDB databases by parallelizing the initialization of all indexes. That was the first clue. Something in this parallel initialization wasn’t working.

And there was also the load factor to consider. The problem only surfaced under load, and to be more precise, under load when we had multiple databases involved, but the issue affected the entire server.

When RavenDB get a request for an unloaded database, it starts loading it in a background thread and the request waits until the database is loaded.

Combine all of those together, and you might start seeing the answer.

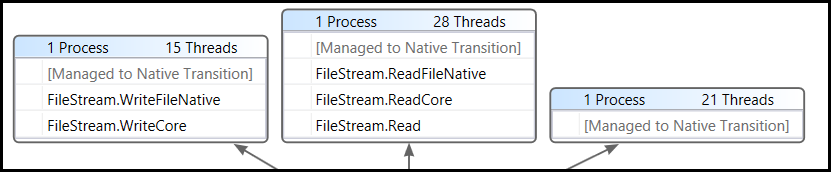

RavenDB has an unloaded database, and we suddenly get a high number of requests for that database, all at the same time. RavenDB will start a background task loading the database, and wait until it is loaded to complete the request. However, because we have a lot of requests to this database, we have a lot of threads that are now waiting for it to complete.

Meanwhile, the database is loading in a background thread, and needs to initialize its indexes. It does this in parallel, using the thread pool…

The same thread pool that we serve requests from…

The same thread pool where we have a lot of threads that are currently waiting for the database to load…

The same database that is now waiting for tasks to complete on that busy thread pool…

If you had things going just right, you would get into a situation where the database couldn’t load, because all the thread pool threads it needed to start were busy waiting for the database to load.

Now, the thread pool would slowly increase the number of threads to take care of the amount of work it had. But those threads would usually be kept busy with more requests to the loading database.

Eventually, the index initialization would go through, the database would complete loading, all the database requests would complete, and the system would go back to normal. But in the meantime… ouch!

The temporary workaround, as I mentioned, was increasing the database idle time to prevent this situation from happening. The long term solution was to limit the amount of time that a thread would wait for a request to load, as well as limit the number of threads that could wait for a database to load. The first five requests for an unloaded database would wait up to ten seconds for that database to load, any additional requests would return with a service unavailable code immediately.

We also made sure that waiting for a database to load can be done in an asynchronous manner and in 3.5 we have a dedicated thread pool for internal RavenDB tasks. But I’ll have another post about that.