I recently got an estimate for a feature that I wanted to add to NH Prof. It was for two separate features, actually, but they were closely related.

That estimate was for 32 hours.

And it caused me a great deal of indigestion. The problem was, quite simply, that even granting that there is the usual padding of estimates (which I expect), that timing estimate was off, way off. I knew what would be required for this feature, and it shouldn’t be anywhere near complicated enough to require 4 days of full time work. In fact, I estimated that it would take me a maximum of 6 hours and a probable of 3 hours to get it done.

Now, to be fair, I know the codebase (well, actually that isn’t true, a lot of the code for NH Prof was written by Rob & Christopher, and after a few days of working with them, I stopped looking at the code, there wasn’t any need to do so). And I am well aware that most people consider me to be an above average developer.

I wouldn’t have batted an eye for an estimate of 8 – 14 hours, probably. Part of the reason that I have other people working on the code base is that even though I can do certain things faster, I can only do so many things, after all.

But a time estimate that was 5 – 10 times as large as what I estimated was too annoying. I decided that this feature I am going to do on my own. And I decided that I wanted to do this on the clock.

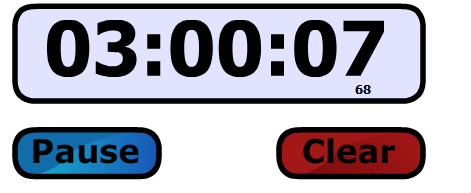

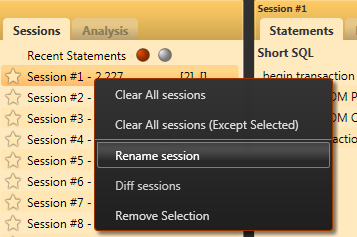

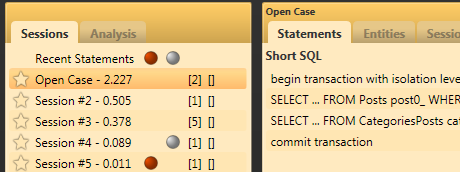

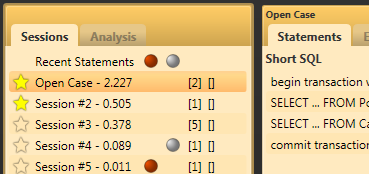

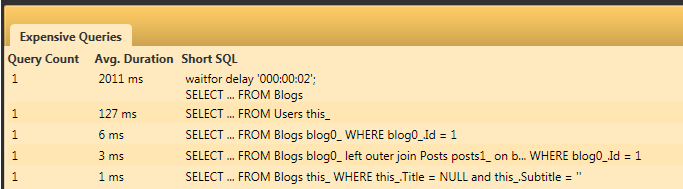

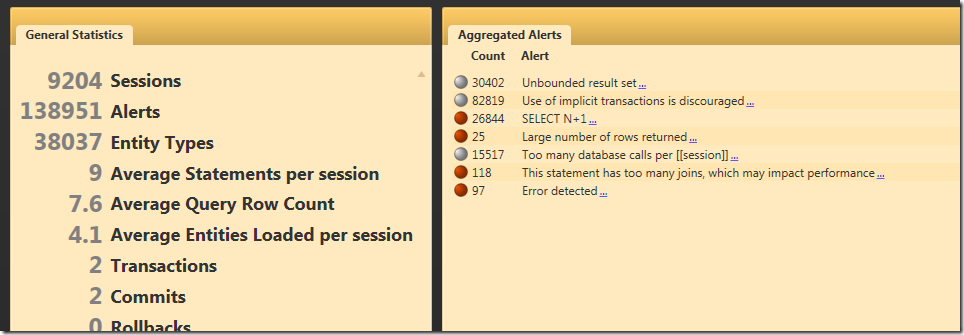

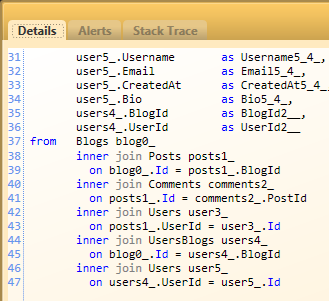

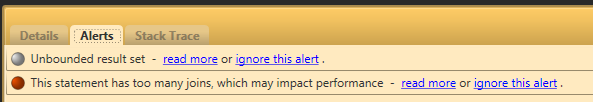

The result is here:

This is actually total time over three separate sittings, but the timing is close enough to what I though it would be.

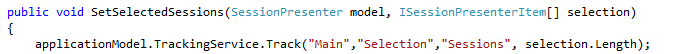

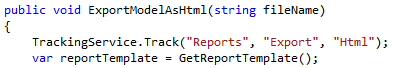

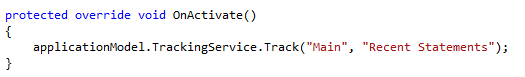

This includes everything, implementing the feature, unit testing it, wiring it up in the UI, etc.

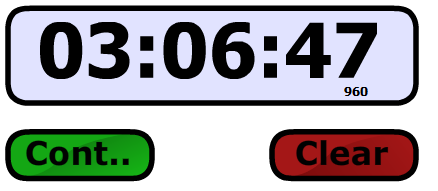

The only thing remaining is to add the UI works for the other profilers (Entity Framework, Linq to SQL, Hibernate and the upcoming LLBLGen Profiler) . Doing this now…

And we are done.

I have more features that I want to implement, but in general, if I pushed those changes out, they would be a new release that customers can use immediately.

Nitpicker corner: No, I am not being ripped off. And no, the people making the estimates aren't incompetent. To be perfectly honest, looking at the work that they did do and the time marked against it, they are good, and they deliver in a reasonable time frame. What I think is going on is that their estimates are highly conservative, because they don't want to get into a bind with "oh, we run into a problem with XYZ and overrun the time for the feature by 150%".

That also lead to a different problem, when you pay by the hour, you really want to have estimates that are more or less reasonably tied to your payments. But estimating with hours has too much granularity to be really effective (a single bug can easily consume hours, totally throwing off estimation, and it doesn't even have to be a complex one.)