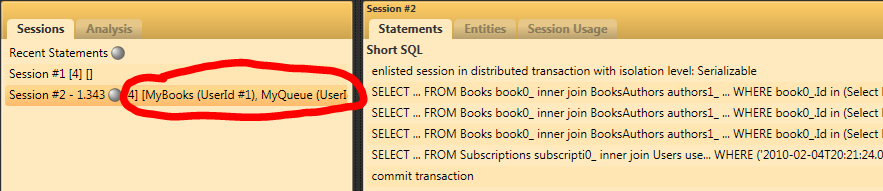

One of the things that I began doing since starting to work on multiple OR/M Profilers is to compare how all of them are handling a particular task. This is by no means a comparative analysis, but it is an interesting data point.

The scenario in question is loading a blog with all its posts and comments.

Let us start with NHibernate:

var blogs = s.CreateQuery(

@"from Blog b

left join fetch b.Posts p

left join fetch p.Comments

where b.Id = :id")

.SetParameter("id", 1)

.List<Blog>();

Will generate the following SQL

select blog0_.Id as Id7_0_,

posts1_.Id as Id0_1_,

comments2_.Id as Id2_2_,

blog0_.Title as Title7_0_,

blog0_.Subtitle as Subtitle7_0_,

blog0_.AllowsComments as AllowsCo4_7_0_,

blog0_.CreatedAt as CreatedAt7_0_,

posts1_.Title as Title0_1_,

posts1_.Text as Text0_1_,

posts1_.PostedAt as PostedAt0_1_,

posts1_.BlogId as BlogId0_1_,

posts1_.UserId as UserId0_1_,

posts1_.BlogId as BlogId0__,

posts1_.Id as Id0__,

comments2_.Name as Name2_2_,

comments2_.Email as Email2_2_,

comments2_.HomePage as HomePage2_2_,

comments2_.Ip as Ip2_2_,

comments2_.Text as Text2_2_,

comments2_.PostId as PostId2_2_,

comments2_.PostId as PostId1__,

comments2_.Id as Id1__

from Blogs blog0_

left outer join Posts posts1_

on blog0_.Id = posts1_.BlogId

left outer join Comments comments2_

on posts1_.Id = comments2_.PostId

where blog0_.Id = 1 /* @p0 */

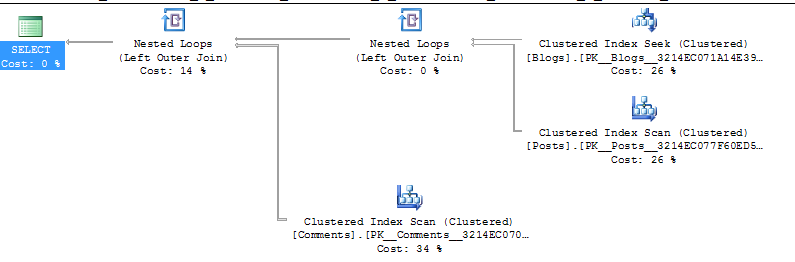

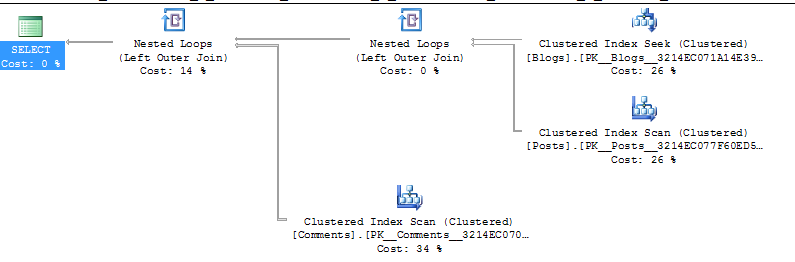

This result in a fairly simple query plan:

However, you should note that this also result in a Cartesian product, which may not be what you wanted.

Linq to SQL doesn’t really provide a good way to express what I wanted, but it does get the job done:

var dataLoadOptions = new DataLoadOptions();

dataLoadOptions.LoadWith<Blog>(x => x.Posts);

dataLoadOptions.LoadWith<Post>(x => x.Comments);

using (var db = new BlogModelDataContext(conStr)

{

LoadOptions = dataLoadOptions

})

{

db.Blogs.Where(x => x.Id == 1).ToList();

}

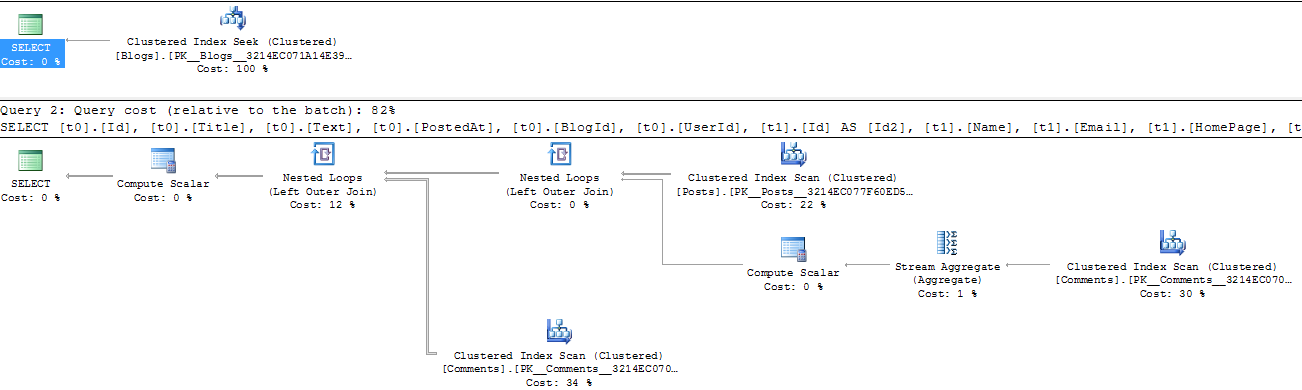

Interestingly enough, this does not generate a single query, but two queries:

-- statement #1

SELECT [t0].[Id],

[t0].[Title],

[t0].[Subtitle],

[t0].[AllowsComments],

[t0].[CreatedAt]

FROM [dbo].[Blogs] AS [t0]

WHERE [t0].[Id] = 1 /* @p0 */

-- statement #2

SELECT [t0].[Id],

[t0].[Title],

[t0].[Text],

[t0].[PostedAt],

[t0].[BlogId],

[t0].[UserId],

[t1].[Id] AS [Id2],

[t1].[Name],

[t1].[Email],

[t1].[HomePage],

[t1].[Ip],

[t1].[Text] AS [Text2],

[t1].[PostId],

(SELECT COUNT(* )

FROM [dbo].[Comments] AS [t2]

WHERE [t2].[PostId] = [t0].[Id]) AS [value]

FROM [dbo].[Posts] AS [t0]

LEFT OUTER JOIN [dbo].[Comments] AS [t1]

ON [t1].[PostId] = [t0].[Id]

WHERE [t0].[BlogId] = 1 /* @x1 */

ORDER BY [t0].[Id],

[t1].[Id]

The interesting bit is that while there are two queries here, this method does not generate a Cartesian product, so I have to consider this a plus. What I would like to know is whatever this is intentionally so or just a result of the way Linq to SQL eager loading is structured.

The query plan for this is simple as well:

Finally, Entity Framework (both 3.5 and 4.0), using this code:

db.Blogs

.Include("Posts")

.Include("Posts.Comments")

.Where(x => x.Id == 1)

.ToList();

This code will generate:

SELECT [Project2].[Id] AS [Id],

[Project2].[Title] AS [Title],

[Project2].[Subtitle] AS [Subtitle],

[Project2].[AllowsComments] AS [AllowsComments],

[Project2].[CreatedAt] AS [CreatedAt],

[Project2].[C1] AS [C1],

[Project2].[C4] AS [C2],

[Project2].[Id1] AS [Id1],

[Project2].[Title1] AS [Title1],

[Project2].[Text] AS [Text],

[Project2].[PostedAt] AS [PostedAt],

[Project2].[BlogId] AS [BlogId],

[Project2].[UserId] AS [UserId],

[Project2].[C3] AS [C3],

[Project2].[C2] AS [C4],

[Project2].[Id2] AS [Id2],

[Project2].[Name] AS [Name],

[Project2].[Email] AS [Email],

[Project2].[HomePage] AS [HomePage],

[Project2].[Ip] AS [Ip],

[Project2].[Text1] AS [Text1],

[Project2].[PostId] AS [PostId]

FROM (SELECT [Extent1].[Id] AS [Id],

[Extent1].[Title] AS [Title],

[Extent1].[Subtitle] AS [Subtitle],

[Extent1].[AllowsComments] AS [AllowsComments],

[Extent1].[CreatedAt] AS [CreatedAt],

1 AS [C1],

[Project1].[Id] AS [Id1],

[Project1].[Title] AS [Title1],

[Project1].[Text] AS [Text],

[Project1].[PostedAt] AS [PostedAt],

[Project1].[BlogId] AS [BlogId],

[Project1].[UserId] AS [UserId],

[Project1].[Id1] AS [Id2],

[Project1].[Name] AS [Name],

[Project1].[Email] AS [Email],

[Project1].[HomePage] AS [HomePage],

[Project1].[Ip] AS [Ip],

[Project1].[Text1] AS [Text1],

[Project1].[PostId] AS [PostId],

CASE

WHEN ([Project1].[C1] IS NULL) THEN CAST(NULL AS int)

ELSE CASE

WHEN ([Project1].[Id1] IS NULL) THEN CAST(NULL AS int)

ELSE 1

END

END AS [C2],

CASE

WHEN ([Project1].[C1] IS NULL) THEN CAST(NULL AS int)

ELSE CASE

WHEN ([Project1].[Id1] IS NULL) THEN CAST(NULL AS int)

ELSE 1

END

END AS [C3],

[Project1].[C1] AS [C4]

FROM [dbo].[Blogs] AS [Extent1]

LEFT OUTER JOIN (SELECT [Extent2].[Id] AS [Id],

[Extent2].[Title] AS [Title],

[Extent2].[Text] AS [Text],

[Extent2].[PostedAt] AS [PostedAt],

[Extent2].[BlogId] AS [BlogId],

[Extent2].[UserId] AS [UserId],

[Extent3].[Id] AS [Id1],

[Extent3].[Name] AS [Name],

[Extent3].[Email] AS [Email],

[Extent3].[HomePage] AS [HomePage],

[Extent3].[Ip] AS [Ip],

[Extent3].[Text] AS [Text1],

[Extent3].[PostId] AS [PostId],

1 AS [C1]

FROM [dbo].[Posts] AS [Extent2]

LEFT OUTER JOIN [dbo].[Comments] AS [Extent3]

ON [Extent2].[Id] = [Extent3].[PostId]) AS [Project1]

ON [Extent1].[Id] = [Project1].[BlogId]

WHERE 1 = [Extent1].[Id]) AS [Project2]

ORDER BY [Project2].[Id] ASC,

[Project2].[C4] ASC,

[Project2].[Id1] ASC,

[Project2].[C3] ASC

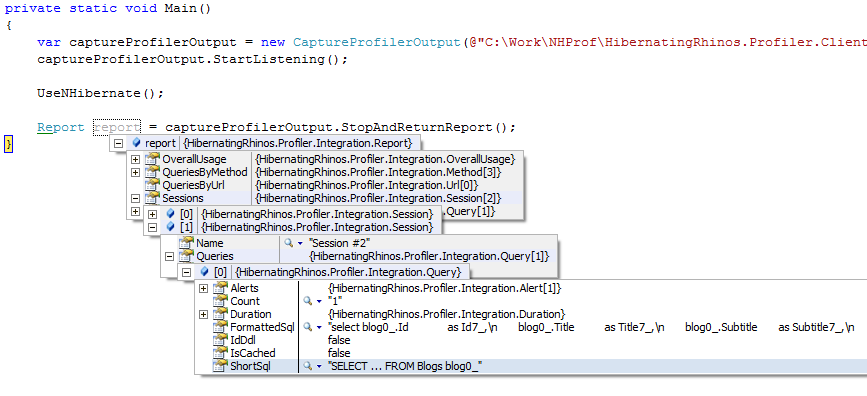

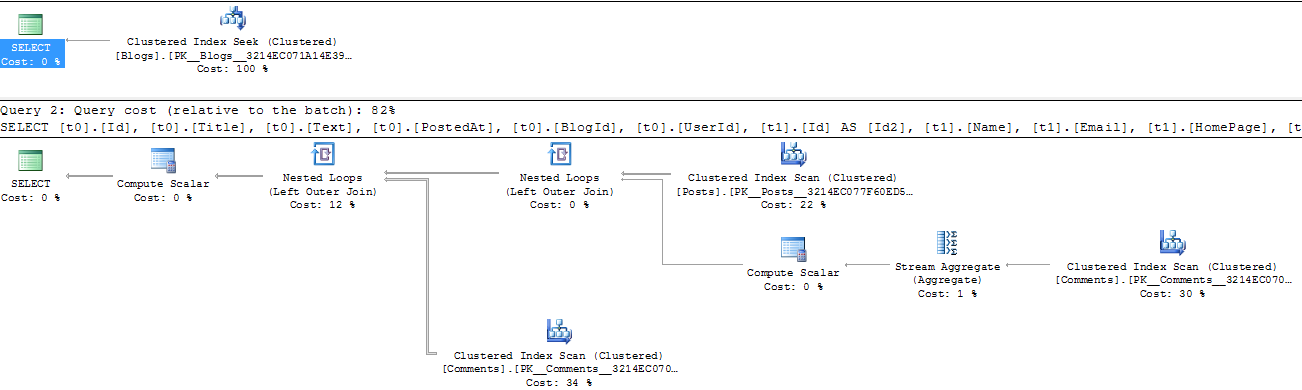

The query plan for this seems overly complicated:

If you’ll look closely, you’ll see that it generate a join between Blogs, Posts and Comments, essentially creating a Cartesian product between all three.

I am not going to offer commentary on the results, but open a discussion on them.