The curse of memory fragmentation

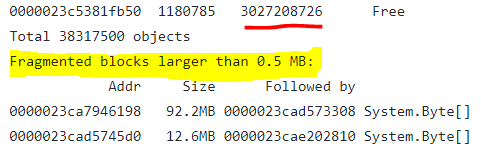

We took a memory dump of a production server that was exhibiting high memory usage. Here are the relevant parts:

You can already see that there is a lot of fragmentation going on. In this case, there are a couple of things that we want to pay special attention to. First, there is about 3GB of free space. Second, we are seeing a lot of fragmented blocks.

Depending on your actual GC settings, you might be expecting some of it. We typically run with Server mode and RetainVM, which means that the GC will delay releasing memory to the operating system. So in some cases a high amount of memory in the process isn’t an issue, but you need to look at how fragmented it is. If you are looking at the WinDBG output and seeing hundreds of thousands of fragments, it means that the GC will need to work that much harder when allocating. It also means that the GC can’t really compact memory, optimize things for higher locality, prevent objects from being promoted to a higher GC generation, etc.
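As a rough complement to the dump, the process can report some of this itself. The following is a minimal sketch, assuming .NET Core 3.0 or later (where `GC.GetGCMemoryInfo()` is available); the `GcReport` class name is made up for illustration. Note that it reports the total number of fragmented bytes, not the number of fragments, so it does not replace looking at the heap in WinDBG.

```csharp
using System;
using System.Runtime;

// Hypothetical helper, not part of the original system.
public static class GcReport
{
    public static string Describe()
    {
        // Values reflect the most recent GC; they can be zero before the first collection.
        GCMemoryInfo info = GC.GetGCMemoryInfo();

        double fragmentedPct = info.HeapSizeBytes == 0
            ? 0
            : 100.0 * info.FragmentedBytes / info.HeapSizeBytes;

        return $"ServerGC={GCSettings.IsServerGC}, " +
               $"Heap={info.HeapSizeBytes:N0} bytes, " +
               $"Fragmented={info.FragmentedBytes:N0} bytes ({fragmentedPct:F1}%)";
    }
}
```

Logging `GcReport.Describe()` periodically gives a trend line, which can tell you when it is worth taking a full dump.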

This kind of fragmentation is usually the result of pinned memory, typically for I/O or interop. Pinning can leave small buffers scattered all over the heap, but most I/O systems are well aware of that and use various tricks to avoid it, typically by allocating buffers large enough to reside in the Large Object Heap, which doesn’t get compacted very often (if ever). If you are seeing something like this in your application, the first thing to check is the number of pinned buffers and instances you are seeing.

In our case, we intentionally made a change to the system that had the side effect of pinning small buffers in memory for a long time, mostly to see how bad that would be. This was to see if we could simplify buffer management somewhat. The answer was that this is quite bad, so we had to manage the buffers more proactively. We allocate a large buffer on the large object heap, then slice it into multiple segments and pool these segments. This way we get small buffers that don’t waste a lot of memory, and we avoid the high memory fragmentation that would otherwise come from pinning them for longish periods.
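The slice-and-pool approach can be sketched roughly as follows. This is a minimal illustration and not our actual implementation: the `SlicedBufferPool` name, the fixed segment size, and the `TryRent`/`Return` methods are all assumptions made for the example. It relies on the fact that arrays of 85,000 bytes or more are allocated on the Large Object Heap.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical sketch: one large array is sliced into fixed-size segments,
// and the segments (not the array) are what gets rented and returned.
public sealed class SlicedBufferPool
{
    private readonly byte[] _slab;          // single allocation, intended for the LOH
    private readonly int _segmentSize;
    private readonly ConcurrentBag<int> _freeOffsets = new ConcurrentBag<int>();

    public SlicedBufferPool(int segmentSize, int segmentCount)
    {
        if (segmentSize <= 0) throw new ArgumentOutOfRangeException(nameof(segmentSize));
        if (segmentCount <= 0) throw new ArgumentOutOfRangeException(nameof(segmentCount));

        _segmentSize = segmentSize;
        // Pick sizes so the total is at least 85,000 bytes, otherwise the
        // slab lands on the small object heap and the benefit is lost.
        _slab = new byte[checked(segmentSize * segmentCount)];

        for (int i = 0; i < segmentCount; i++)
            _freeOffsets.Add(i * segmentSize);
    }

    // Returns false when every segment is currently rented.
    public bool TryRent(out ArraySegment<byte> segment)
    {
        if (_freeOffsets.TryTake(out int offset))
        {
            segment = new ArraySegment<byte>(_slab, offset, _segmentSize);
            return true;
        }

        segment = default;
        return false;
    }

    // Note: this sketch does not detect the same segment being returned twice.
    public void Return(ArraySegment<byte> segment)
    {
        if (!ReferenceEquals(segment.Array, _slab) ||
            segment.Count != _segmentSize ||
            segment.Offset % _segmentSize != 0)
        {
            throw new ArgumentException("Segment does not belong to this pool.", nameof(segment));
        }

        _freeOffsets.Add(segment.Offset);
    }
}
```

For example, `new SlicedBufferPool(segmentSize: 4096, segmentCount: 256)` gives 256 buffers of 4KB backed by a single 1MB array. Pinning any segment pins that one large array, so the small object heap has no long-lived pinned objects scattered through it.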
