As part of our 4.0 deployment, we have a cluster made of mixed machines, some running Windows, some running Linux. In one particular configuration, we have 2 Windows machines and a single Linux machine. We set up the cluster and started directing production traffic to it, and all was good. We left for the weekend and came back the following week to see what the results were. Our Linux machine was effectively down. It was not responding to queries and appeared to be completely unresponsive.

That was good and bad. It was good because the rest of the cluster just worked, and there was no interruption in service. This is as designed, but it is always nice to see this in real life. It was bad because it isn’t supposed to happen. What was really frustrating was that we were able to log into the machine and there was nothing visibly wrong there.

No high CPU or memory, no outstanding I/O or anything of the kind that we are used to.

The first clue came when we tried to diagnose the problem from inside the machine. We were able to reproduce it by accessing the server from the local machine, with exactly the same result as observed externally. Namely, the server would accept the connection and just leave it hanging, eventually timing out.

That was an interesting discovery, since it meant that we could rule out anything in the middle. This was a problem in this machine. But the problem remained quite hard to figure out. We deployed to production in a manner similar to what we expect our users will do, so we used Let’s Encrypt as the certificate authority with auto generated certificates.

So we started by figuring out where the problem was, whether on the TCP side or the SSL side. We issued the following command:

openssl s_client -connect b.cluster-name.dbs.local.ravendb.net:443

This command showed an immediate connection to the server and the client sending the ClientHello properly, but then just hanging there. What was really interesting is that if we waited about 2 minutes, the SSL connection would complete successfully. But we couldn’t figure out any reason why this would be the case. It occurred to me that it might be related to the system handling of reverse DNS lookup. The two minute timeout was very suspicious, and I assumed that it might be trying to look up the client certificate and somehow resolve that. That isn’t how it works in general, although the fact that some SSH (and not SSL/TLS) configuration options directly relate to this led us on a merry chase.
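One way to tell the TCP side from the SSL side without reading `s_client` output by eye is to time the two phases separately. The following is a minimal Python sketch, not something we ran during the investigation. `time_tcp_and_tls` is a hypothetical helper name, and the default timeout is an assumption; you would raise it above two minutes to watch the slow handshake complete.

```python
import socket
import ssl
import time


def time_tcp_and_tls(host, port, timeout=10.0, context=None):
    """Return (tcp_seconds, tls_seconds).

    tls_seconds is None when the TLS handshake does not finish within
    `timeout` seconds, which is the "accepts and hangs" symptom.
    """
    ctx = context or ssl.create_default_context()

    # Phase 1: plain TCP connect.
    start = time.monotonic()
    sock = socket.create_connection((host, port), timeout=timeout)
    tcp_seconds = time.monotonic() - start

    try:
        # Phase 2: TLS handshake on the already connected socket.
        start = time.monotonic()
        try:
            tls = ctx.wrap_socket(sock, server_hostname=host)
        except socket.timeout:
            return tcp_seconds, None
        tls_seconds = time.monotonic() - start
        tls.close()
        return tcp_seconds, tls_seconds
    finally:
        # Harmless if wrap_socket already took ownership of the descriptor.
        sock.close()
```

Against a server in the state described above, the first number should be tiny, while the second is either `None` or close to the length of the stall.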

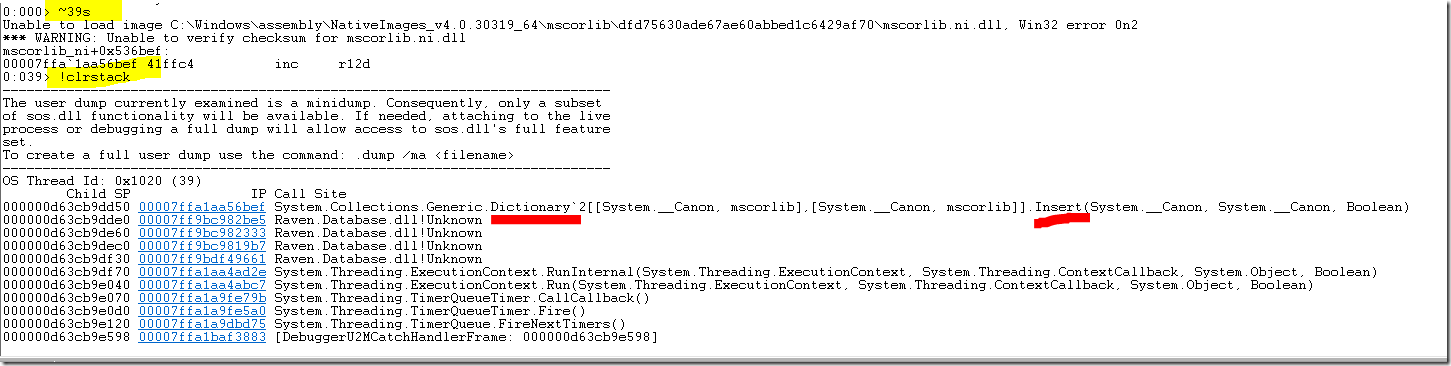

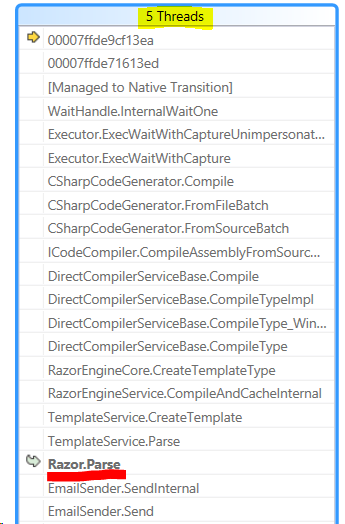

Eventually we pulled out strace and looked into what was actually going on. We focused on the following calls:

sudo strace -fp 1017 -s 128 -Tfe open,socket,connect,sendto,recvfrom,write

The interesting bits from there are shown here:

As you can see, we are looking at some DNS resolution, as we can tell from the /etc/resolv.conf and /etc/hosts open() calls. Then we have a connect() to 172.31.0.2 which is an AWS name server. Note that this is done over UDP, as you can see from the SOCK_DGRAM option in the preceding socket() call.

We are getting some data back, and we can see identrust there. And then we see something really suspicious. We have a TCP socket call that goes to 192.35.177.64 on port 80. In other words, this is an HTTP call. What is an HTTP call doing in the middle of an SSL handshake?

As it turned out, our firewall configuration was blocking outbound connections to port 80. We tested removing that rule and everything came back online and the server was running just fine.
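A quick way to confirm this kind of rule from the affected machine is to attempt a plain TCP connection to the port in question. This is a small Python sketch under assumptions: `can_connect` is a hypothetical helper, and the five second timeout is arbitrary.

```python
import socket


def can_connect(host, port, timeout=5.0):
    """Return True if a TCP connection to (host, port) succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers timeouts (packets silently dropped), refused connections,
        # and name resolution failures.
        return False
```

If `can_connect("192.35.177.64", 80)` returns `False` on this machine but `True` on a machine with no egress rules, that is consistent with a firewall dropping outbound traffic on port 80.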

Further inspection revealed that we were calling to: http://apps.identrust.com/roots/dstrootcax3.p7c

And this is where things started to gel. We are using Let’s Encrypt certificates, and in order to ensure trust, we need to send the full chain to the user. SSL certificates have the notion of Authority Information Access (AIA), which is basically a URL that is registered in the certificate and points to where you can find the certificate that signed this one.
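To see which URL a certificate advertises in this field, Python's standard `ssl` module exposes it as the `caIssuers` entry of `getpeercert()`. The sketch below is an illustration only. The helper names are hypothetical, and it reads the field from the server's own (leaf) certificate, so the URL it prints may be one step lower in the chain than the IdenTrust URL above.

```python
import socket
import ssl


def ca_issuer_urls(peer_cert):
    """Return the 'CA Issuers' URLs from a dict shaped like SSLSocket.getpeercert()."""
    return list(peer_cert.get("caIssuers", ()))


def fetch_leaf_ca_issuers(host, port=443, timeout=10.0):
    """Connect to host:port and return the caIssuers URLs of the server certificate.

    getpeercert() only returns a populated dict when the certificate was
    validated, which the default context requires.
    """
    ctx = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return ca_issuer_urls(tls.getpeercert())
```

Any URL returned here is one that a chain builder may try to download when the issuing certificate is not available locally.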

Why is this using HTTP? Because the data that will be fetched is already signed, and it is not a secret. And trying to use HTTPS to fetch it might get us into a loop.

So whenever we had a new SSL connection, we’d try to connect to IdenTrust to get the full chain to send to the client. The killer here is that if we fail to do so, we’ll send the certificate chain we have (without the missing root), and it will still work, since the other side (usually) already has this root installed. On Windows, this certificate is installed, so we didn’t see it. On Linux, we didn’t have that certificate installed, so we had to look it up every single time.

The gory details, including dives into the source code, are in the GitHub issue. And I do think they are gory. In this case, once we realized what was going on, we were able to take steps to handle this. We needed to pre-register the entire chain on the local machine, so any lookup will be able to find it locally and not make a network call for each SSL connection.

But beyond mutating the global certificate store, there is no real way to prevent that remote call.

Note that this is also true for Windows, although that seems to be implemented much deeper in the stack, and not in managed code, so I wasn’t able to trace where this is actually happening. The really bad thing here is that from the outside, there is no way for us to control or disable this. So this is just something that you have to remember to do when you use certificates: make sure that the entire chain is registered on each machine. Otherwise you might have a remote call per connection, or a very long (and synchronous!) hang until the request times out if you are blocking outgoing access.

![8011098[3] 8011098[3]](https://ayende.com/blog/Images/Open-Live-Writer/How-to-deal-with-data-corruption-a-view-_8D3D/8011098[3]_thumb.gif)