A customer called to complain that the indexing times that they were seeing on an index rebuild were very high, and that caused them issues. The customer was kind enough to actually provide us with a duplicate machine of their system, including duplicate data, which made the whole process so much easier. Unlike most scenarios, where we have to poke the logs, the debug endpoints and to try to figure out what is going on in a production system that we can’t really touch without causing downtime, here we had a complete freedom of action during the investigation.

The database in question is in the many tens of GB in size, and like most production databases, it has its own.. gravity, shall we say? Unlike a test data set where you can do something over the entire set and get immediate return, here the problem often was that to reproduce the issue we’ll have to start the action, then wait for ten or twenty minutes for it to pick up steam and actually start exhibiting the problem. But being able to actually run those tests repeatedly was very valuable in both narrowing down on exactly what was going on and how to resolve it.

The problem boiled down to an issue with how we were handling document prefetching. Before I get down into the details of that, let me explain what prefetching is.

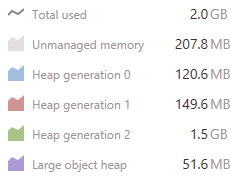

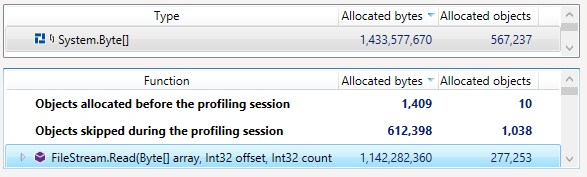

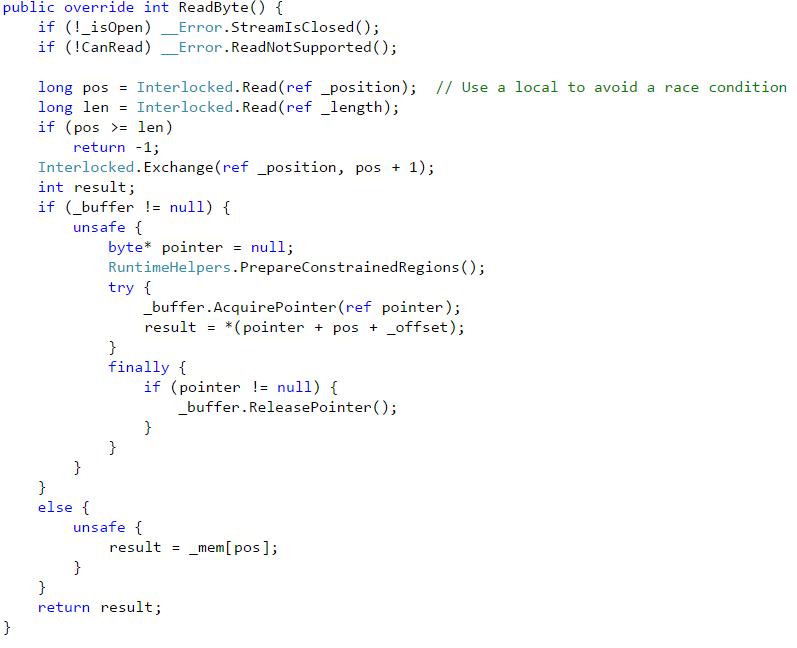

Quite a lot of RavenDB code is concerned with reducing the time a request has to spend waiting for I/O. In particular, creation of a new index require us to read all the documents in the database so we can index them. On large databases, that can mean that we need to read tens of GB (and on very large databases, running an index that cover half a TB is very likely) from disk, index them, then write the index results to disk again.

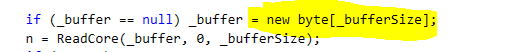

Initially (as in, five or six years ago), we wrote the indexing code like so:

- while (there are documents to index):

- Load a batch of documents

- Index those documents

- Write them to disk

The problem is that this kind of code is very simple an easy to understand, but it also results in spending a lot of time doing:

- Wait for load documents (no CPU usage)

- Index documents (CPU usage)

- Wait to write to disk (no CPU usage)

So a lot of the time was spent just waiting for I/O. Time that could have been much better spent doing something useful.

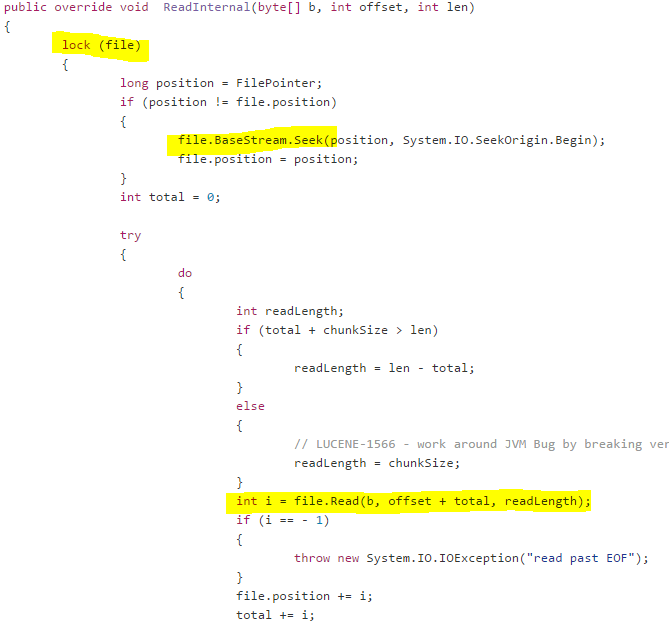

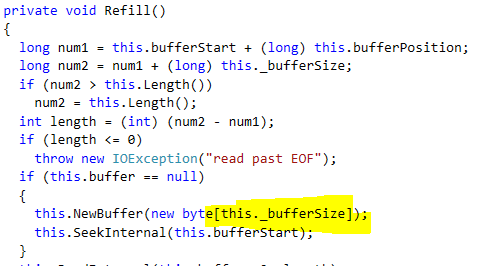

Because of that, we introduced the idea of prefetching. Basically, whenever we finish loading stuff from disk, we also immediately start a background task that will read the next batch of documents to member. The idea is that while we are indexing / writing the index results to disk, we’ll load the next batch of documents to memory, and we’ll have them immediately available to the indexing code, so we’ll have to do less waits, and we get the benefit of parallel I/O and execution.

This is a really high level overview of what is going on there, of course, and we need to balance quite a few competing concerns (memory, I/O pipeline size, I/O speed, other work being done, CPU utilization, etc, etc). But that is a pretty good description.

The problem in this case was that the customer in question have the following pattern of documents:

Our code mostly assumes that you have a roughly uniform distribution of documents sizes. Given the distribution above, assume we have a batch size of 2.

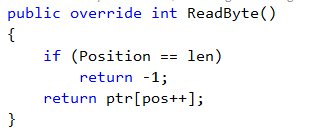

We’ll read the first two documents (taking 25Kb), and then start indexing them. At this time we start loading the next 2 documents. But the msgs/4 document is large, so it takes time to load, which means that indexing is now stalled on I/O.

What is work, the problem exacerbated, since the bigger documents tended to be toward the end (later documents tend to be bigger), it means that our heuristics about the data kept misleading us. Now, to make things worse, we actually do care about the size of the documents that we load, so instead of indexing the documents in big batches, those big documents would cause both I/O stalls, and then cause us to send much smaller batches to the indexes. That means that we have a lot more indexing batches, and a lot more I/O stalls.

The solution was to allow the prefetching code to give the indexes “whatever I have on hand”, and then continue with prefetching the additional documents while the indexes are working. It means more batches, but far less time waiting for the documents to be loaded from disk.

Another change we did was to parallelize the I/O further. When we notice that we get into this kind of situation, instead of firing off a single background task to load the next document batch, we are actually going to spin off multiple prefetching tasks, to load the next few batches in parallel. That means that we put more load on the I/O system, but especially on cloud machines, that is actually a good thing (they they to have a shallow but wide I/O behavior).

Here the ability to actually test those changes on real system was invaluable, because our initial attempt was a bit… too active and actually placed serious I/O strain on the system, because we would try to make a lot of parallel reads for a lot of data at the same time. The implementation that we ended up with knows to scale the amount of pressure we put on the I/O system based on the actual system we use, the (current) I/O throughput we see, the document sizes in recent history, etc.

The end result is that we were able to shave about 20% – 25% of the indexing time under those conditions, and keep the system alive and functioning while we are doing so.

We also introduced the customer to the side by side, which allows them to deploy indexes in production without any interruption in service while the indexing is rebuilding.